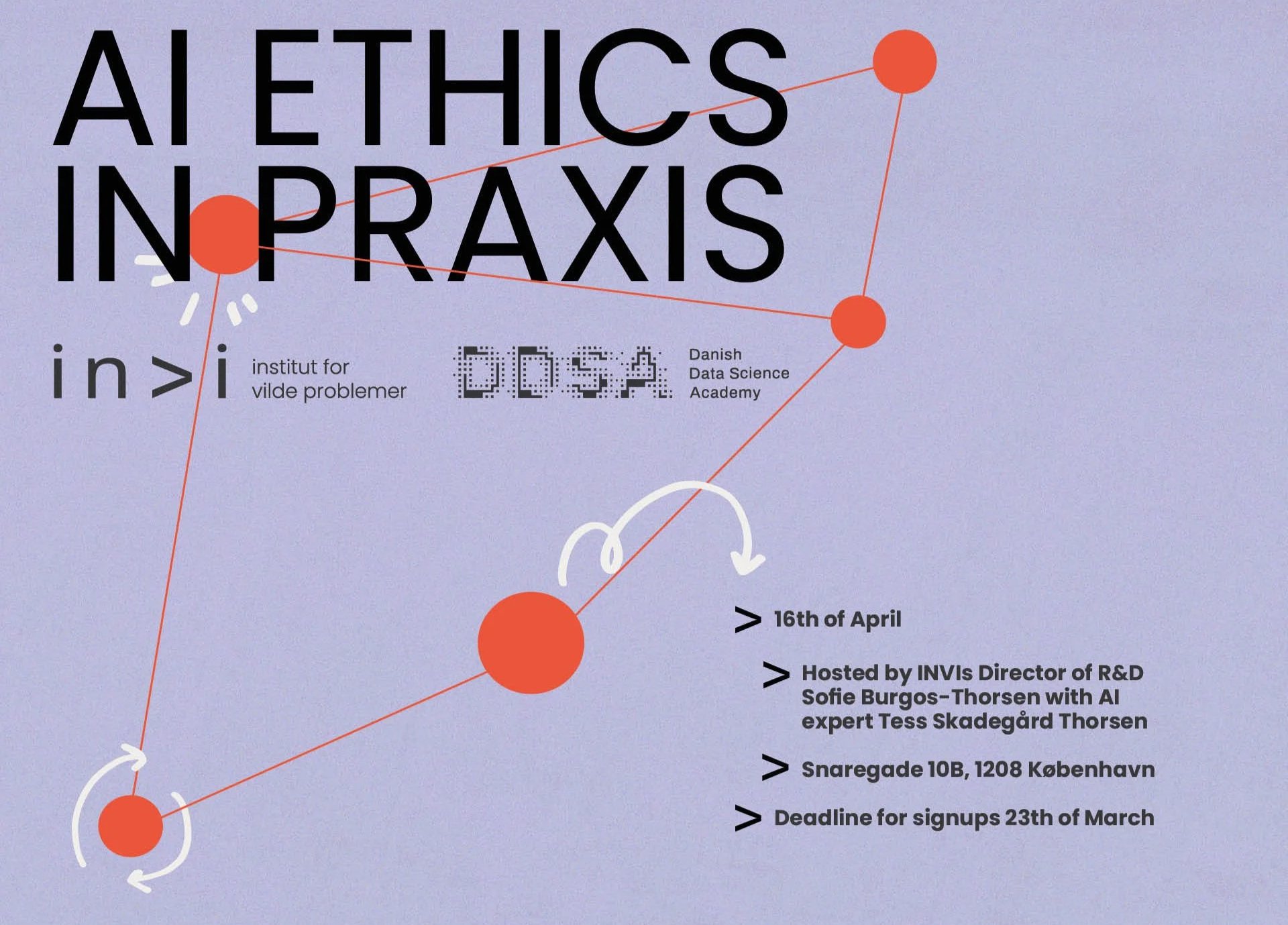

AI ethics in practice

A one-day masterclass for practitioners, held at the Institute for Wicked Problems, that gives you a compass for navigating the use of AI in your organization.

AI systems are rapidly moving from pilot projects into the core of organizations' infrastructure. They shape decisions, workflows, services, and strategic priorities, often in ways that are difficult to predict fully. Ethical issues are therefore no longer confined to conference rooms or academic debates. They arise in communication strategies, procurement processes, data flows, supplier collaborations, and implementation choices. Many professionals today bear real responsibility for these decisions but lack a clear framework for navigating the complexity. Often, what slows organizations down is not uncertainty about the potential of AI, but uncertainty about how to realize that potential responsibly. Where do you start? How do you translate AI ethics into your organization, not just as a list of rules, but as a practice that connects innovation with the organization's core values?

AI Ethics in Practice is a one-day masterclass for professionals facing precisely these questions. The course is held at the Institute for Wicked Problems (INVI) and is supported by the Danish Data Science Academy (DDSA). It offers structured tools, shared reflection, and application-oriented frameworks that go beyond a narrow focus on GDPR and data protection. Participants work with specific cases from their own organizational contexts. The goal is to equip you to translate ethical ambitions into concrete action. This is what we mean by AI ethics as practice: a space where principles are embedded in decisions and theory becomes lived organizational practice.

The course is taught by Sofie Burgos-Thorsen (PhD, Head of Research and Analysis at INVI) and Tess Skadegård Thorsen (PhD, expert in tech and AI ethics), two internationally recognized researchers and practitioners who combine academic weight with hands-on experience in AI innovation, implementation, and assessment. Drawing on Design Justice, Data Feminism, and critical data studies, they translate research-based perspectives into concrete tools that you can take back to your organization.

What will you take away?


You will gain experience with and access to a toolbox designed for real organizational contexts, which makes ethical practice more concrete and easier to apply. You can take it back to your team and use it to support internal assessments and decision-making processes around AI. The toolbox contains:

An ethical compass for structured reflection and decision-making

Frameworks for assessing risks and grounds for discrimination

Tools to strengthen resilience, fairness, and responsible implementation

An annotated reading list for further development

The emphasis is on practical application. You will leave the course with methods that can support internal assessments, strategy work, procurement, implementation plans, and internal training.


The masterclass is based on research-based approaches such as Design Justice, Data Feminism, and critical data studies, but with a focus on how they can be operationalized in practice. You will learn to:

Identify ethical challenges early in AI initiatives

Structure dilemmas instead of reacting ad hoc

Go beyond compliance and work with responsible strategic decision-making


By working with specific cases—including your own—you will gain greater clarity on how to navigate uncertainty without unnecessarily slowing down innovation. You will leave the course better equipped to:

Facilitate internal conversations about AI ethics

Support colleagues and management in responsible choices

Engage in dialogue with suppliers and partners with clearer expectations

Align AI initiatives with organizational values and social responsibility


Working with ethical AI can feel like a lonely gig. The masterclass creates a space for sharing experiences across sectors and industries in Denmark. You'll be part of a community of professionals who face similar strategic and practical dilemmas—and who are committed to strengthening responsible AI practices together.

Who is the course for?

The masterclass is aimed at professionals with at least three years of experience in business, the public sector, NGOs, foundations, or larger organizations who are involved in shaping how AI is used in their organization or department. The course is not limited to those who work directly with data, AI, or technology. It can include anyone who is in a position to set the tone for the use of AI in the organization's work. You may be a communications manager struggling to figure out when, where, and how AI tools should (or should not) be used in your team. Perhaps you are a department head responsible for ensuring that AI initiatives comply with the EU AI Act and Danish regulations. Or you may be responsible for procurement or leading the implementation of AI systems in a municipality and feel the weight of the ethical decisions that come with it. The key is that you have influence over how AI is used—strategically, operationally, or culturally—and want tools to navigate ethical dilemmas in practice.

The course is particularly relevant if you:

Help set the strategic direction for the use of AI in your team or organization

Teach colleagues how to use AI (including compliance with the EU AI Act and Danish regulations)

Are responsible for purchasing or supplier collaboration related to AI

Work with implementation, governance, or testing of AI-based technologies

Want more robust internal decision-making processes around AI

You do not need a technical background to participate, but you should have a desire to learn how to navigate the ethical dilemmas that arise when AI systems are used in professional contexts. We expect you to have a natural curiosity and willingness to critically examine how AI affects people, processes, and organizational values.

Participants are welcome from municipalities, public institutions, creative agencies, foundations, NGOs, and private companies (including SMEs and startups) throughout Denmark. Researchers and PhD students are also welcome, but in order to maintain a practical focus, the number of places for researchers is deliberately limited, and we encourage participants to bring an application-oriented case rather than theoretical or abstract questions.

Places are limited and will be allocated on a first-come, first-served basis. If you are unsure whether the course is right for you, please feel free to contact us.

Meet your instructors

Sofie Burgos-Thorsen (PhD, Head of Research and Analysis at INVI)

Sofie is Head of Research and Analysis at INVI. With a background in sociology and more than ten years of experience bridging research and practice, she applies AI, participatory methods, and digital design to drive innovation and social change. Her work focuses particularly on democratic innovation and on policy and strategy processes in which AI is used to address complex issues. Sofie holds a PhD in data-driven urban planning from Aalborg University and MIT and has taught internationally. Her awards include the Ziman Award (2020) and the EU Citizen Science Prize (2023). She has served as a jury member at Ars Electronica and is currently vice-chair of the Danish Data Science Community's Open Source Committee. In the course, she draws on her research in Data Feminism and Design Justice, many years of experience teaching AI ethics at university level, and a deep understanding of what it means to work with AI-driven innovation in specific organizational contexts.

Masterclass format

A detailed program will be shared with participants 1–2 weeks before the course. In outline, the masterclass combines:

Short presentations setting the scene for ethical issues

Introduction to the ethical toolbox

Structured exercises based on your own cases

Exchange of experiences and facilitated reflection in groups

Time for networking and informal dialogue

Participants are invited to bring cases or dilemmas from their own work. Sofie and Tess will contact you a few weeks before the course and ask you to prepare your case.

INVI will provide morning coffee and bread, lunch, afternoon coffee and snacks, as well as wine, beer, and soft drinks for networking at the end of the day.

Practical matters

Language

In the interest of inclusivity, the course is taught in English so that more people can participate. English is the standard language for the presentations, but if you are more comfortable in Danish, you are encouraged to speak Danish in case work and group discussions. If all participants are Danish speakers, the presentations can also be held in Danish.

Location

The masterclass will take place at the Institute for Wicked Problems (INVI), Snaregade 10B, 1208 Copenhagen K. Please note that there are stairs and no elevator.

How to sign up

To register, fill out the registration form via the link on the website. You will receive a personal follow-up confirming your place as soon as possible. Places are limited and are allocated on a first-come, first-served basis.

Price (excluding VAT)

Public/private organizations and foundations: DKK 3,000

NGOs and self-employed persons: DKK 2,600

PhD students (max. 5 places): DKK 1,500

The masterclass is supported by the Danish Data Science Academy (DDSA), which makes it possible to significantly reduce the participation fee compared to many other AI courses.

Contact us

If you would like to know more or are curious about whether the course is suitable for you and your colleagues, please feel free to send an email to Sofie Burgos-Thorsen at sofie@invi.nu.

Tess Skadegård Thorsen (PhD, AI & Tech Ethics Expert)

Tess is a researcher, lecturer, and consultant specializing in representation in technology and media. With a background in ESG and CSR consulting, she has built her career around socially sustainable products and responsible technology development. In 2022, she received the Kraka Award for Danish gender research. Her work has been published internationally, and she has taught at institutions such as Columbia University, Pennsylvania State University, Roskilde University, Aalborg University, Lund University, and the University of Copenhagen. Tess has been a fellow at the NYU Institute for Public Interest Technology and was previously Director of Product Ethics at Comcast NBCUniversal. She is a member of the advisory board for the Danish Pioneer Centre for AI. She is currently launching Gaard & Sekara, a consulting firm focusing on AI auditing, fairness, and robustness.